What is Jitter in Networking?

What is jitter and how do we measure it? A general overview of jitter in computer networks.


Jitter is the unwanted, and often excessive, variation in the delay between when a signal is transmitted and when it is received over a network connection. In other words, network jitter is the variation in latency between packets sent over the same connection.

Jitter mostly affects real-time applications such as video streaming, online gaming, and Voice over IP (VoIP). The results are poor quality, delays, increased response times, and dropped packets. Network congestion, hardware limitations and malfunctions, routing changes, and other network anomalies can all cause jitter.

In a computer network, this variation in latency disrupts the regular timing of packets arriving at or leaving a device: packets sent at even intervals arrive at uneven intervals and may even arrive out of order.

Jitter is generally measured in milliseconds (ms). A good connection has a reliable, consistent response time, while a connection with high jitter has an unreliable, inconsistent one. High jitter therefore means unpredictable packet travel times across the network, and it can lead to packet loss when packets arrive too late to be used.

Common causes of jitter include:

Hardware limitations or malfunctions - can make network links unreliable, unexpectedly reducing bandwidth and increasing packet travel time.

Wireless connections - are inherently less reliable than wired links, which can reduce bandwidth and increase packet travel time.

Missing or misconfigured packet prioritization - if packets from bandwidth- and latency-sensitive applications are not prioritized, those applications may experience reduced bandwidth and increased packet travel time.
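Packet prioritization can be illustrated with a strict-priority scheduler. The Python sketch below is a hypothetical, simplified model rather than how any particular router implements QoS: the traffic classes (`voip`, `video`, `bulk`), their priority values, and the `StrictPriorityQueue` class are assumptions made for illustration. Waiting latency-sensitive packets are always sent before bulk traffic, so their queueing delay stays short and predictable.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical traffic classes: a lower number means a higher priority.
PRIORITY = {"voip": 0, "video": 1, "bulk": 2}


@dataclass(order=True)
class QueuedPacket:
    priority: int
    seq: int  # arrival order, keeps FIFO order within one priority class
    name: str = field(compare=False)


class StrictPriorityQueue:
    """Hypothetical scheduler that always sends the highest-priority waiting packet."""

    def __init__(self):
        self._heap = []
        self._counter = count()

    def enqueue(self, name, traffic_class):
        packet = QueuedPacket(PRIORITY[traffic_class], next(self._counter), name)
        heapq.heappush(self._heap, packet)

    def dequeue(self):
        return heapq.heappop(self._heap).name

    def __len__(self):
        return len(self._heap)


q = StrictPriorityQueue()
arrivals = [("backup-1", "bulk"), ("backup-2", "bulk"), ("call-1", "voip"),
            ("frame-1", "video"), ("call-2", "voip")]
for name, traffic_class in arrivals:
    q.enqueue(name, traffic_class)

order = [q.dequeue() for _ in range(len(q))]
print(order)
```

Real QoS schedulers usually add safeguards such as rate limits or weighted queues so that low-priority traffic is not starved; strict priority is only the simplest case.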

We can measure jitter with multiple methods:

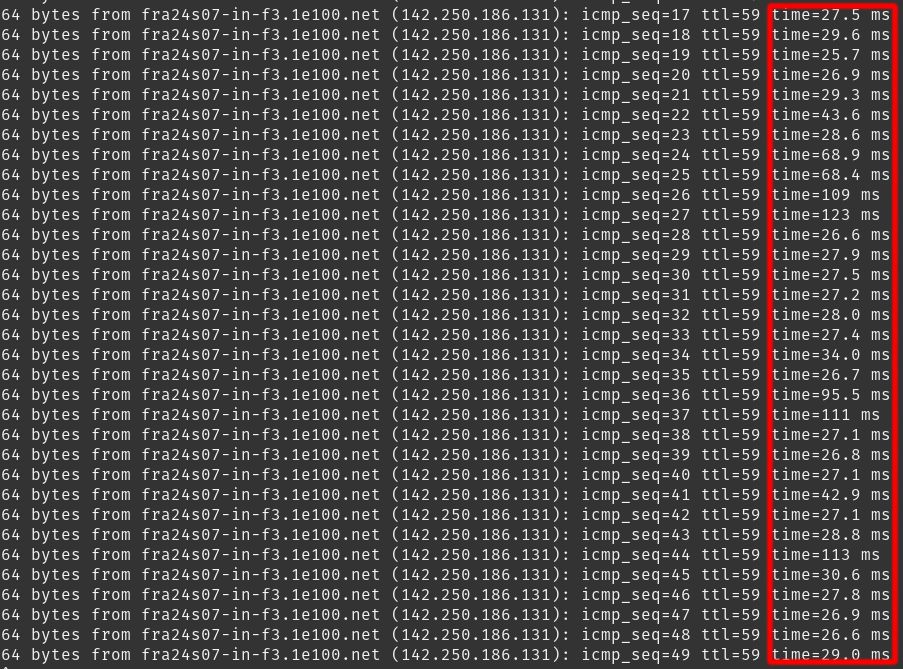

One of the most common ways to measure jitter is with ping. Ping reports the round-trip time of each packet; to get the average jitter, we take the absolute difference between each pair of consecutive round-trip times and calculate the mean of those differences. For the following example, which has 33 consecutive differences, the calculation is:

$$\text{jitter} = \frac{|27.5-29.6|+|29.6-25.7|+\dots+|26.6-29.0|}{33}=19.9\ \text{ms}$$
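The ping calculation can be written in general form for $N$ round-trip times as

$$J = \frac{1}{N-1}\sum_{i=1}^{N-1} \left| RTT_{i+1} - RTT_i \right|$$

and sketched in Python as follows. The RTT values are hypothetical, not the measurements from the example. For comparison, the sketch also includes the running estimator defined in RFC 3550 (the RTP specification), which moves the jitter estimate 1/16 of the way toward each new difference instead of averaging all differences equally; feeding it ping round-trip times rather than one-way transit times is an approximation.

```python
def average_jitter(rtts_ms):
    """Mean absolute difference between consecutive round-trip times, in ms."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two RTT samples")
    # N samples give N - 1 consecutive differences.
    diffs = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return sum(diffs) / len(diffs)


def rfc3550_jitter(transit_ms):
    """Running jitter estimate in the style of RFC 3550: J += (|D| - J) / 16."""
    jitter = 0.0
    for prev, cur in zip(transit_ms, transit_ms[1:]):
        d = abs(cur - prev)          # change in delay between consecutive packets
        jitter += (d - jitter) / 16  # move 1/16 of the way toward the new value
    return jitter


# Hypothetical ping round-trip times in milliseconds.
rtts = [20.0, 22.0, 19.0, 25.0, 21.0]
print(f"average jitter: {average_jitter(rtts):.2f} ms")
print(f"RFC 3550-style estimate: {rfc3550_jitter(rtts):.2f} ms")
```

Because the RFC 3550 estimate starts at zero and only moves 1/16 of the way toward each new difference, it needs many samples before it settles, so on a short series like this one it stays well below the simple average.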

Various mechanisms and technologies that manage the network's inner workings, grouped under the name Quality of Service (QoS), can eliminate jitter entirely or at least reduce it. The main approaches are:

Properly designed networks help reduce jitter by making traffic more predictable. Monitoring the network's latency and bandwidth makes it possible to detect congestion and other issues and fix them before they become problematic. Unnecessary bandwidth usage during work hours can also be reduced by scheduling updates and backups outside business hours.
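Monitoring can be as simple as recomputing the jitter over a sliding window of recent latency samples and raising an alert when it crosses a limit. In this Python sketch, the `JitterMonitor` class, the window size, and the threshold are hypothetical choices for illustration; a suitable limit depends on the applications running on the network.

```python
from collections import deque


class JitterMonitor:
    """Hypothetical monitor that tracks jitter over the last `window` RTT samples."""

    def __init__(self, window=10, threshold_ms=30.0):
        self.samples = deque(maxlen=window)  # oldest samples drop out automatically
        self.threshold_ms = threshold_ms

    def current_jitter(self):
        """Mean absolute difference between consecutive samples in the window."""
        s = list(self.samples)
        if len(s) < 2:
            return 0.0
        diffs = [abs(b - a) for a, b in zip(s, s[1:])]
        return sum(diffs) / len(diffs)

    def add(self, rtt_ms):
        """Record one RTT sample and return True if jitter exceeds the threshold."""
        self.samples.append(rtt_ms)
        return self.current_jitter() > self.threshold_ms


monitor = JitterMonitor(window=5, threshold_ms=10.0)
# Hypothetical RTTs (ms): stable at first, then a burst of congestion.
samples = [20, 21, 20, 22, 21, 60, 15, 70]
alerts = []
for rtt in samples:
    alert = monitor.add(rtt)
    alerts.append(alert)
    print(f"rtt={rtt} ms  jitter={monitor.current_jitter():.2f} ms  alert={alert}")
```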

Services that require consistently low latency and high bandwidth, such as video conferencing and VoIP, generally use a jitter buffer: incoming packets are intentionally delayed for a short time so they can be played out at a steady rate. A poorly sized buffer can hurt call quality. If the buffer is too small, packets that arrive late miss their playout time and are discarded; if it is too large, the added delay becomes noticeable, for example as lag in a conversation.
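The buffer-size trade-off can be shown with a minimal fixed-delay jitter buffer. This Python sketch assumes one packet every 20 ms, schedules playout relative to the first packet's arrival plus `buffer_ms`, and discards packets that arrive after their playout time. The function name and delay values are hypothetical, and real VoIP clients typically use adaptive buffers that resize themselves.

```python
PACKET_INTERVAL_MS = 20  # assumption: the sender emits one voice frame every 20 ms


def simulate_jitter_buffer(network_delays_ms, buffer_ms):
    """Fixed-delay jitter buffer sketch.

    Packet i is sent at i * PACKET_INTERVAL_MS and experiences network_delays_ms[i].
    Playout is scheduled relative to the first packet's arrival plus buffer_ms;
    packets that arrive after their playout time are discarded.
    Returns (played, discarded, end_to_end_ms).
    """
    first_arrival = network_delays_ms[0]  # packet 0 is sent at t = 0
    played = discarded = 0
    for i, delay in enumerate(network_delays_ms):
        arrival = i * PACKET_INTERVAL_MS + delay
        playout = first_arrival + buffer_ms + i * PACKET_INTERVAL_MS
        if arrival <= playout:
            played += 1
        else:
            discarded += 1  # arrived too late to be played
    # Each played packet is heard this long after it was sent.
    end_to_end_ms = first_arrival + buffer_ms
    return played, discarded, end_to_end_ms


# Hypothetical one-way network delays (ms) with two late spikes.
delays = [40, 45, 38, 70, 42, 90, 41, 44]
for buffer_ms in (10, 35, 60):
    played, discarded, e2e = simulate_jitter_buffer(delays, buffer_ms)
    print(f"buffer={buffer_ms} ms: played={played} discarded={discarded} delay={e2e} ms")
```

With these sample delays, a 10 ms buffer keeps the end-to-end delay low but discards two packets, while a 60 ms buffer plays every packet at the cost of a longer delay.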

For home users, the wireless link is usually the main cause of unpredictable latency and drops in bandwidth. Users who need consistently low latency and high bandwidth can upgrade their wireless equipment or switch to wired Ethernet connections whenever possible.