What Is Network Latency and How Can We Reduce It?

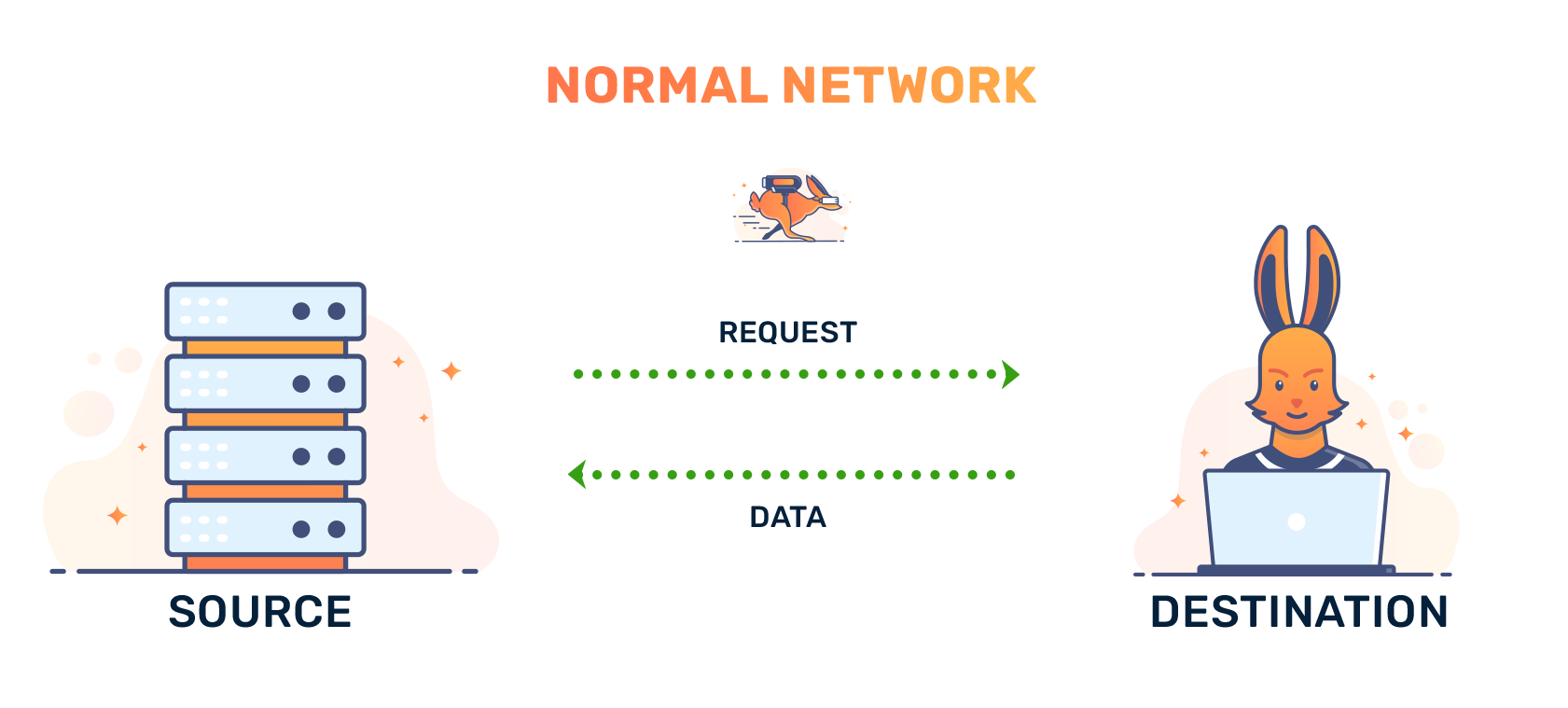

In general terms, latency is the time delay between a user’s action and the response to that action. In an online setting, it is the delay between an action by a user interacting with a website (e.g., clicking a link) or a software application (e.g., tapping send on WhatsApp) and the response (the web page finishing loading, or the double-tick delivery receipt appearing in WhatsApp).

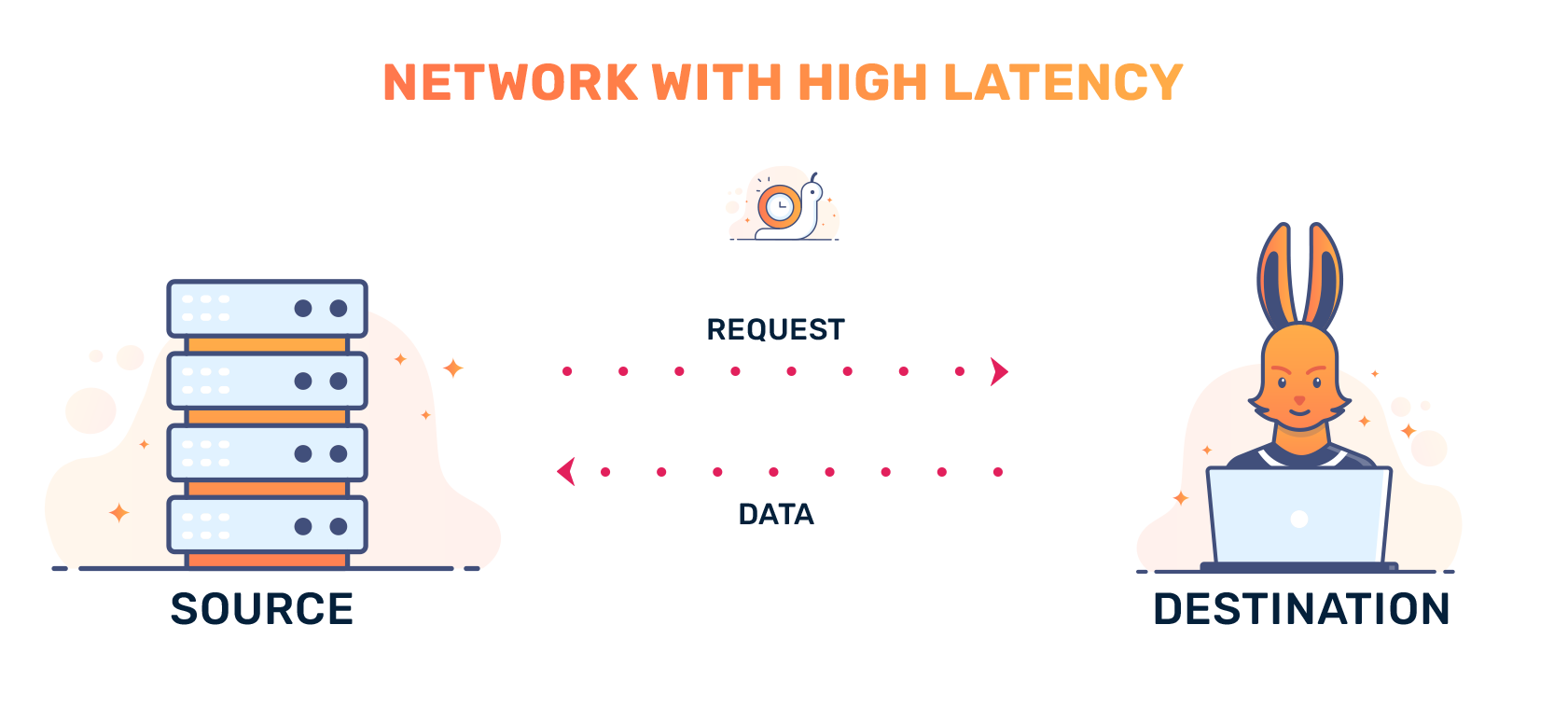

Although data on the Internet travels very quickly (light in fiber optic cables moves at roughly two-thirds of its speed in a vacuum), latency cannot be completely eliminated because of factors such as physical distance and network routing. In a worst-case scenario, latency can become so high that requests time out and packets are treated as lost, so keeping Internet latency to a minimum is important. Lower network latency improves website performance and user satisfaction; it can even affect search engine optimization (SEO) performance.
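To make the distance limit concrete, here is a minimal sketch that estimates propagation delay over a fiber link. It assumes light in glass fiber travels at about two-thirds of its vacuum speed (the `FIBER_VELOCITY_FACTOR` constant is an approximation), and the 10,000 km cable length is a hypothetical figure, not a real route. Routing, queuing, and processing delays are ignored.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum, km/s
FIBER_VELOCITY_FACTOR = 0.67       # assumption: light in fiber moves at ~2/3 of c


def propagation_delay_ms(distance_km: float,
                         velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Return the one-way propagation delay in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    speed_km_s = SPEED_OF_LIGHT_KM_S * velocity_factor
    return distance_km / speed_km_s * 1000.0


# Hypothetical 10,000 km cable run (illustrative only).
one_way_ms = propagation_delay_ms(10_000)
round_trip_ms = 2 * one_way_ms
print(f"one-way: {one_way_ms:.1f} ms, round trip: {round_trip_ms:.1f} ms")
```

Under these assumptions, a round trip over that distance costs on the order of 100 ms before any server work is done, which is why no amount of bandwidth removes latency entirely.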

Network latency, throughput, and bandwidth can all affect the performance of your website or web application. Although they are closely related, they are distinct terms. Bandwidth is the maximum amount of data that can pass through a connection in a given amount of time. For example, a 100 Mbps connection allows a theoretical maximum of 12.5 megabytes of data per second (100 / 8 = 12.5). Throughput is the average amount of data that actually passes through. Throughput often falls short of the theoretical bandwidth, partly because of latency: the higher the latency, the more time is spent waiting for responses instead of transmitting data. Thus, maximum throughput is a function of bandwidth (the higher the better) and latency (the lower the better).
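The relationship between bandwidth, latency, and throughput can be sketched numerically. The example below assumes a simplified model in which a sender may have at most one window of unacknowledged data in flight per round trip; the 65,535-byte default is the classic TCP window without window scaling and is an assumption here, not a property of every connection. The function names are illustrative.

```python
def bandwidth_megabytes_per_s(bandwidth_mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return bandwidth_mbps / 8


def max_throughput_mbps(bandwidth_mbps: float, rtt_ms: float,
                        window_bytes: int = 65_535) -> float:
    """Upper bound on throughput: the lower of link bandwidth and window / RTT."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    window_limit_mbps = (window_bytes * 8) / (rtt_ms / 1000.0) / 1_000_000
    return min(bandwidth_mbps, window_limit_mbps)


print(bandwidth_megabytes_per_s(100))          # 12.5
for rtt in (1, 20, 100):
    print(rtt, "ms RTT ->", round(max_throughput_mbps(100, rtt), 2), "Mbps")
```

In this model, the same 100 Mbps link is fully usable at a 1 ms round trip but capped at a small fraction of its bandwidth at 100 ms, which illustrates why lower latency raises achievable throughput.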

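Several factors can contribute to increased latency in website and application performance, including the physical distance data must travel, the number of networks it is routed through, the type of connection, and the size of the content being delivered.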

Internet latency can be reduced at two ends: the user end and the web server end. On the user’s end, switching to a fiber optic connection and using wired Ethernet instead of a wireless connection can noticeably reduce latency.

On the web server’s end, content delivery networks (CDNs) can be used to mitigate the latency caused by geographical distance and routing. CDNs often have multiple servers on different continents, which greatly reduces both the physical distance that content has to travel to the end user and the number of networks that data packets have to be routed through.
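The idea of serving content from the closest location can be illustrated with a small sketch that picks the nearest edge server by great-circle distance. This is a simplification: real CDNs typically steer users through DNS or anycast routing and consider load and network conditions, not just geography. The `EDGES` table and its location names are hypothetical, with approximate coordinates.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge locations: name -> (latitude, longitude), approximate.
EDGES = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "virginia": (39.04, -77.49),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge(user: tuple[float, float], edges: dict[str, tuple[float, float]]) -> str:
    """Return the name of the edge location closest to the user."""
    return min(edges, key=lambda name: haversine_km(user, edges[name]))


paris = (48.86, 2.35)
print(nearest_edge(paris, EDGES))
```

A user near Paris would be served from the Frankfurt entry in this table rather than from a server an ocean away, which shortens the path content has to travel.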

Web designers can also reduce the effects of latency with several techniques. They can optimize images to make them smaller, eliminate render-blocking resources by moving `<script>` or `<link>` tags to the bottom of a page’s HTML code, or minify JavaScript and CSS files.
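As an illustration of what minification does, here is a deliberately naive CSS minifier. It is a sketch under strong assumptions: it does not understand strings, `url(...)` values, or selectors where whitespace is significant, so a dedicated build tool is the safer choice for real projects. The function name `minify_css` is made up for this example.

```python
import re


def minify_css(css: str) -> str:
    """Naively shrink CSS by removing comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # strip /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # drop spaces around punctuation
    css = css.replace(";}", "}")                          # drop the last semicolon in a block
    return css.strip()


source = """
body {
  color: red;  /* brand color */
  margin: 0 auto;
}
"""
minified = minify_css(source)
print(minified)
print(f"{len(source)} -> {len(minified)} characters")
```

Fewer bytes mean fewer packets and less time on the wire, so the saving matters most on high-latency connections.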

Latency: a measure of the time between a request being made and being fulfilled. Latency is usually measured in milliseconds.
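The definition above maps directly onto a timer wrapped around a request. The sketch below times any callable and reports the result in milliseconds. The helper names `measure_latency_ms` and `tcp_connect` are illustrative, and `example.com` in the usage note is a placeholder host. The demo itself uses a short sleep as a stand-in for a request so that it runs without network access.

```python
import socket
import time
from typing import Callable


def measure_latency_ms(action: Callable[[], object]) -> float:
    """Run `action` once and return the elapsed time in milliseconds."""
    start = time.perf_counter()
    action()
    return (time.perf_counter() - start) * 1000.0


def tcp_connect(host: str, port: int = 443, timeout: float = 3.0) -> None:
    """Open and immediately close a TCP connection (one round trip to set up)."""
    with socket.create_connection((host, port), timeout=timeout):
        pass


# Stand-in for a real request: sleep for about 50 ms.
elapsed = measure_latency_ms(lambda: time.sleep(0.05))
print(f"{elapsed:.1f} ms")
```

To time a real connection, one could call `measure_latency_ms(lambda: tcp_connect("example.com"))`. A single sample is noisy, so taking several measurements and looking at the median is usually more informative.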

Packet loss: the percentage of packets that are lost between a source and a destination.
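As a worked example of this percentage, the following sketch computes packet loss from counts of packets sent and received. The function name and the sample counts are illustrative assumptions.

```python
def packet_loss_percent(sent: int, received: int) -> float:
    """Return the share of sent packets that never arrived, as a percentage."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    if not 0 <= received <= sent:
        raise ValueError("received must be between 0 and sent")
    return 100.0 * (sent - received) / sent


# Hypothetical run: 100 packets sent, 97 acknowledged.
print(packet_loss_percent(100, 97))
```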