What is Caching and how does a Cache work?

A gentle primer into HTTP and CDN Caching

Caching means storing items in a temporary location, or cache, to be accessed more easily later. The term cache doesn't just apply to computers. For example, the researchers in the first successful expedition to the South Pole stacked, or cached, food supplies along the route on their way there so they would have food to eat when they returned. Caching was much more practical than having supplies routinely delivered from their base camp.

In computing, caching serves a similar purpose. When a client needs data, it has to fetch it from storage, and data transfer can be slow. So the first time data is transferred to where it is processed, we keep a copy of it close to the processing unit. If we need the data again later, we skip the round-trip to storage, which can significantly reduce latency.
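The idea of "pay the round-trip once, then reuse the local copy" can be sketched as a tiny read-through cache. This is a minimal illustration, not code from any particular system: `slow_fetch` is a hypothetical stand-in for a slow storage read, and `ReadThroughCache` is an illustrative name.

```python
import time
from typing import Callable, Dict


def slow_fetch(key: str) -> str:
    """Hypothetical stand-in for a slow storage read."""
    time.sleep(0.05)  # simulate transfer latency
    return f"value-for-{key}"


class ReadThroughCache:
    """Keeps a copy of each fetched item next to the code that uses it."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self._store:
            self.hits += 1  # served locally: no storage round-trip
            return self._store[key]
        self.misses += 1  # first access: pay the round-trip once
        value = self._fetch(key)
        self._store[key] = value
        return value


cache = ReadThroughCache(slow_fetch)
cache.get("report")  # miss: goes to storage
cache.get("report")  # hit: served from the cache
print(cache.hits, cache.misses)  # 1 1
```

Real caches also need a size limit and an eviction policy; this sketch keeps everything forever to stay focused on the hit/miss idea.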

Here are some examples of caching in computing:

This article focuses on HTTP and CDN caching.

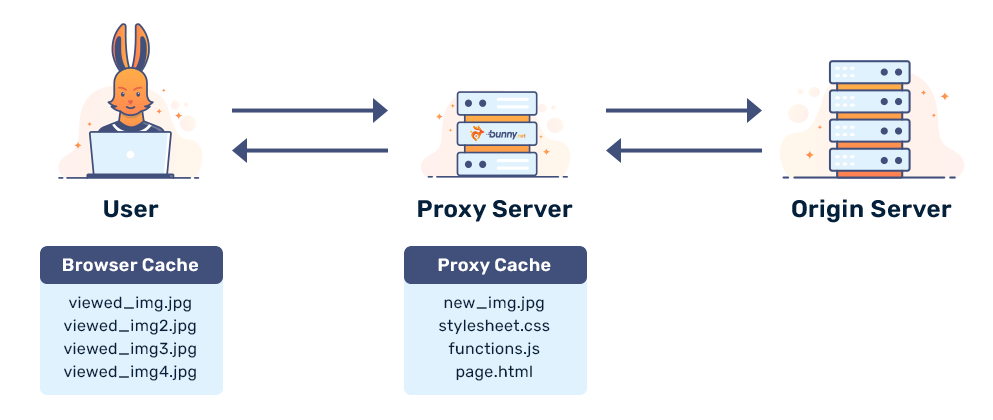

HTTP distinguishes two types of caches: private and shared.

A private cache is a cache specific to a particular client, like a web browser. Private caches can store client-specific content that may contain sensitive information.

A shared cache, on the other hand, sits between the client and the origin server and stores content that is shared among multiple clients. Such caches must not store sensitive, client-specific content.
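The private/shared distinction shows up in the `private` and `no-store` directives of the Cache-Control header. Below is a simplified sketch of the storage decision; `parse_cache_control` and `may_store` are hypothetical helpers, and the check deliberately ignores other RFC 9111 storage rules (Authorization, Vary, status codes, and so on).

```python
from typing import Dict, Optional


def parse_cache_control(header: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value such as 'private, max-age=60' into directives."""
    directives: Dict[str, Optional[str]] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def may_store(cache_control: str, shared: bool) -> bool:
    """Simplified storage check for a private (shared=False) or shared cache."""
    directives = parse_cache_control(cache_control)
    if "no-store" in directives:
        return False  # no cache may keep this response
    if shared and "private" in directives:
        return False  # intended only for a single client's private cache
    return True


print(may_store("private, max-age=600", shared=False))  # True: browser cache
print(may_store("private, max-age=600", shared=True))   # False: CDN or proxy
print(may_store("public, max-age=600", shared=True))    # True
```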

The most common type of shared cache is a managed cache. Managed caches are deliberately deployed to reduce the load on the origin server and to improve content delivery. Examples include reverse proxies, CDNs, and service workers in combination with the Cache API.

Managed caches are called managed because they allow administrators to manage cached contents directly through administration panels, service calls, or a similar mechanism. This is in contrast to other types of caches, which are managed by the server only through the Cache-Control response header field.

Here are a few examples of management capabilities:

Even though many websites today are dynamic, meaning they generate content on the fly, they still contain a lot of static content. Images, video and audio files, JavaScript, CSS resources, and file archives are all examples of static content. If these resources are cached at locations closer to the end users, such as on a CDN, the origin server saves bandwidth while the end users receive content faster and more reliably.

When content is requested for the first time, the request goes to the origin server, which returns the requested resources. For each resource it returns, the origin server sets a caching policy, usually in the Cache-Control response header, that states whether the resource can be cached, where, and for how long.
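As an illustration of an origin attaching a policy to each resource, here is a sketch that picks a Cache-Control value from the request path. The path prefix, the extension list, and the one-day lifetime are all assumptions chosen for the example, not recommendations from this article.

```python
import posixpath

# Illustrative assumption: these extensions are treated as long-lived static assets.
STATIC_EXTENSIONS = {".js", ".css", ".png", ".jpg", ".mp4", ".zip"}


def cache_policy(path: str) -> str:
    """Return the Cache-Control value a hypothetical origin attaches to a resource."""
    ext = posixpath.splitext(path)[1].lower()
    if path.startswith("/account/"):
        return "private, no-cache"  # per-user pages: browser only, revalidate before reuse
    if ext in STATIC_EXTENSIONS:
        return "public, max-age=86400"  # any cache may store it, fresh for one day
    return "no-cache"  # may be stored, but must be revalidated before reuse


print(cache_policy("/static/app.js"))     # public, max-age=86400
print(cache_policy("/account/settings"))  # private, no-cache
print(cache_policy("/index.html"))        # no-cache
```

The three answers map onto the three questions in the policy: whether to cache (`no-cache` still allows storing), where (`public` vs `private`), and for how long (`max-age`).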

When a user later requests a resource that is already cached, they receive the cached copy, either from the private cache in their browser or from a shared cache in the CDN. In the first case, the HTTP request is avoided entirely; in the second, it is sent to an edge server that is closer to the user than the origin server.
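The lookup order described above (browser cache, then CDN edge, then origin) can be modeled as a chain of tiers. This is a toy model under stated assumptions: `Tier`, `fetch`, and `origin_server` are hypothetical names, every tier stores every response, and freshness and Cache-Control rules are ignored.

```python
from typing import Callable, Dict, List, Tuple


class Tier:
    """One cache layer in a hypothetical model: a browser cache or a CDN edge."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.entries: Dict[str, str] = {}


def fetch(url: str, tiers: List[Tier], origin: Callable[[str], str]) -> Tuple[str, str]:
    """Return (body, source), checking the nearest tier first and filling tiers that missed."""
    missed: List[Tier] = []
    for tier in tiers:
        if url in tier.entries:
            body, source = tier.entries[url], tier.name
            break
        missed.append(tier)
    else:
        # No tier had a copy: go all the way to the origin server.
        body, source = origin(url), "origin"
    for tier in missed:
        tier.entries[url] = body  # keep a copy on the way back to the client
    return body, source


def origin_server(url: str) -> str:
    return f"<content of {url}>"


browser, edge = Tier("browser"), Tier("cdn-edge")
print(fetch("/logo.png", [browser, edge], origin_server)[1])  # origin
print(fetch("/logo.png", [browser, edge], origin_server)[1])  # browser
browser.entries.clear()  # e.g. a second user whose browser cache is empty
print(fetch("/logo.png", [browser, edge], origin_server)[1])  # cdn-edge
```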

A resource in cache is in one of two states: fresh or stale. A fresh resource is valid and can be used immediately while a stale resource has expired and needs to be validated.

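The driving factor in deciding whether a resource is stale is its age. In HTTP, a cached response remains fresh as long as its age, roughly the time elapsed since it was fetched from the origin, is less than its freshness lifetime, which the server sets with the max-age directive of Cache-Control or with the Expires header. Version identifiers such as ETags do not decide staleness; they are used when a stale resource is validated.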
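The freshness rule "age is less than freshness lifetime" can be written out as a small check. This is a simplified sketch: `max_age` and `is_fresh` are hypothetical helpers, and the code ignores the Age header, Expires, s-maxage, and the heuristic freshness that RFC 9111 allows. A response without max-age is simply treated as stale here.

```python
import time
from typing import Optional


def max_age(cache_control: str) -> Optional[int]:
    """Extract max-age (in seconds) from a Cache-Control value, or None if absent."""
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_fresh(fetched_at: float, cache_control: str, now: Optional[float] = None) -> bool:
    """A cached response is fresh while its age is below its freshness lifetime."""
    lifetime = max_age(cache_control)
    if lifetime is None:
        return False  # simplification: no explicit lifetime means stale
    now = time.time() if now is None else now
    age = now - fetched_at
    return age < lifetime


print(is_fresh(1000.0, "max-age=60", now=1030.0))  # True (age 30 s < 60 s)
print(is_fresh(1000.0, "max-age=60", now=1061.0))  # False (age 61 s)
```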

When a cached resource becomes stale, we don't discard it immediately. Instead, we can restore its freshness by asking the origin server whether the resource has changed. This is done with a conditional HTTP request, which carries an extra header identifying the cached copy: If-Modified-Since, with the time the cached resource was last modified (its Last-Modified value), or If-None-Match, with the version identifier (ETag) of the cached content.
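Building those conditional headers from a stored response is straightforward. The sketch below assumes the cache kept the original response headers in a plain dictionary; `conditional_headers` is a hypothetical helper, and a real client would pass the result to its HTTP library.

```python
from typing import Dict


def conditional_headers(cached: Dict[str, str]) -> Dict[str, str]:
    """Build revalidation headers from a cached response's stored headers."""
    headers: Dict[str, str] = {}
    if "ETag" in cached:
        headers["If-None-Match"] = cached["ETag"]  # "is this still version X?"
    if "Last-Modified" in cached:
        headers["If-Modified-Since"] = cached["Last-Modified"]  # "changed since time T?"
    return headers


stored = {"ETag": '"v42"', "Last-Modified": "Wed, 21 Oct 2015 07:28:00 GMT"}
print(conditional_headers(stored))
# {'If-None-Match': '"v42"', 'If-Modified-Since': 'Wed, 21 Oct 2015 07:28:00 GMT'}
```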

If the stale resource hasn't changed, the origin server responds with 304 Not Modified, telling the cache that its copy is up to date. This response is very short: 304 Not Modified carries only headers and no body. If the resource has changed, the server returns a normal 200 OK response whose body contains the updated resource.
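On the origin side, the decision between 304 and 200 can be sketched as follows. `RESOURCES` and `handle_get` are hypothetical, and the ETag check is a simplified exact match: a full implementation also handles lists of tags, `*`, and weak comparison as specified in RFC 9110.

```python
from typing import Dict, Optional, Tuple

# Hypothetical origin state: path -> (current ETag, body)
RESOURCES: Dict[str, Tuple[str, bytes]] = {
    "/style.css": ('"v42"', b"body { color: black; }"),
}


def handle_get(path: str, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Answer a GET, honoring If-None-Match with a simplified exact-match check."""
    etag, body = RESOURCES[path]
    if if_none_match == etag:
        return 304, {"ETag": etag}, b""  # unchanged: headers only, no body
    return 200, {"ETag": etag}, body  # first request or changed resource: full body


print(handle_get("/style.css", None)[0])     # 200
print(handle_get("/style.css", '"v42"')[0])  # 304
print(handle_get("/style.css", '"v41"')[0])  # 200
```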

This process is called validation, or revalidation.
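From the cache's side, validation either restarts the freshness clock of the stored copy (304) or replaces it (200). A minimal sketch, assuming a hypothetical `CachedEntry` record and an origin modeled as a function from request headers to `(status, headers, body)`; for brevity it does not merge the updated headers that a real 304 response may carry.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Response = Tuple[int, Dict[str, str], bytes]


@dataclass
class CachedEntry:
    body: bytes
    etag: str
    fetched_at: float


def revalidate(entry: CachedEntry, origin: Callable[[Dict[str, str]], Response]) -> CachedEntry:
    status, headers, body = origin({"If-None-Match": entry.etag})
    if status == 304:
        # Unchanged: keep the stored body and restart its freshness clock.
        return CachedEntry(entry.body, entry.etag, time.time())
    # Changed (200 OK): replace the cached copy with the new representation.
    return CachedEntry(body, headers.get("ETag", ""), time.time())


def fake_origin(request_headers: Dict[str, str]) -> Response:
    """Hypothetical origin whose current version is "v2"."""
    current_etag = '"v2"'
    if request_headers.get("If-None-Match") == current_etag:
        return 304, {"ETag": current_etag}, b""
    return 200, {"ETag": current_etag}, b"new body"


stale = CachedEntry(b"old body", '"v1"', fetched_at=0.0)
fresh = revalidate(stale, fake_origin)
print(fresh.body)                           # b'new body' (version changed, 200)
print(revalidate(fresh, fake_origin).body)  # b'new body' (304, stored body reused)
```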